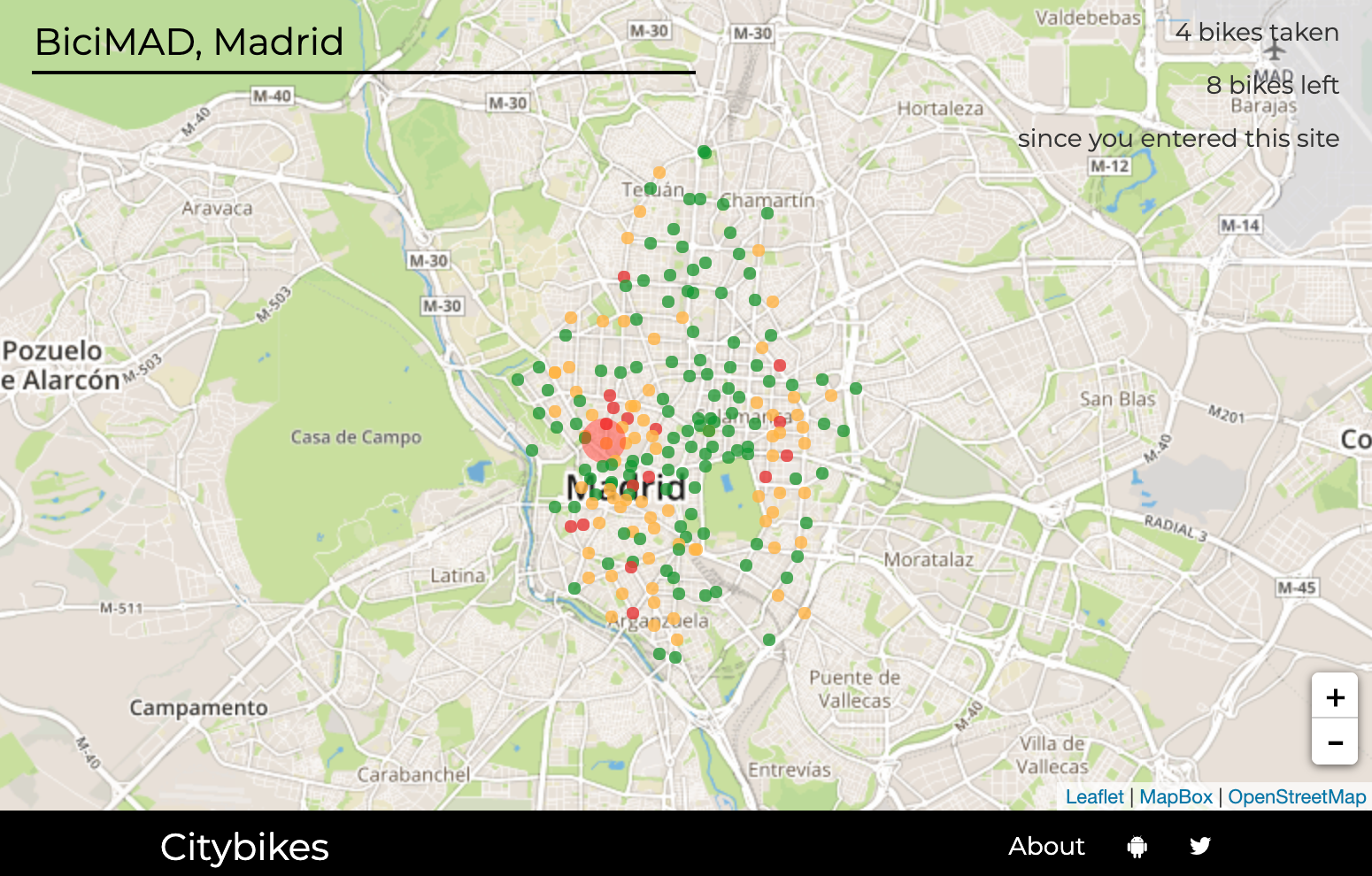

This month in Citybikes #202510

It’s been a slow month, but here’s another take on the monthly changelog!

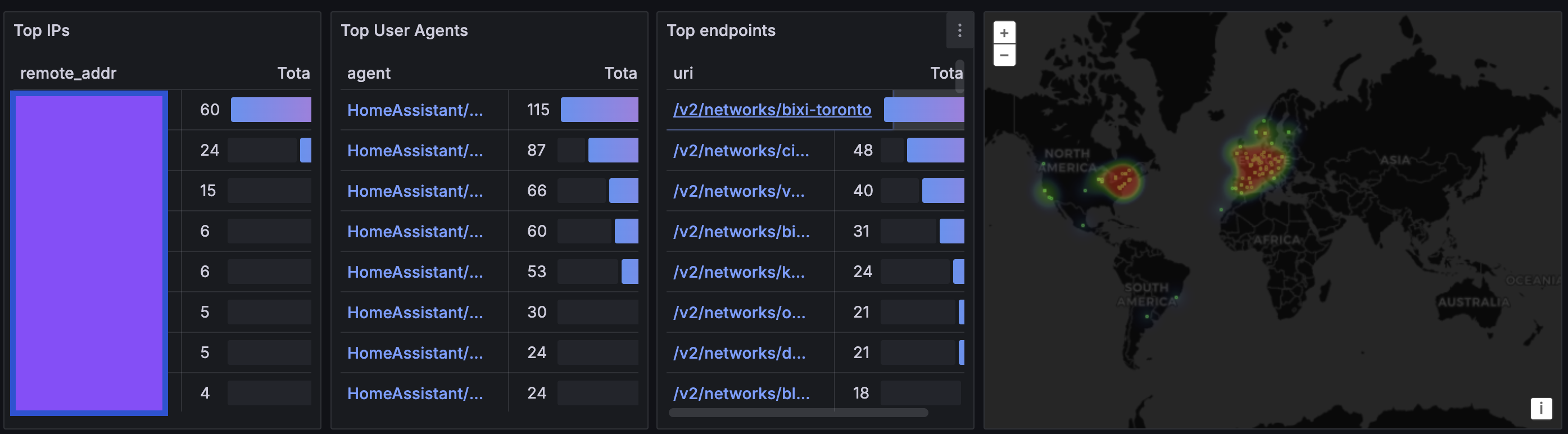

Home Assistant and Citybikes

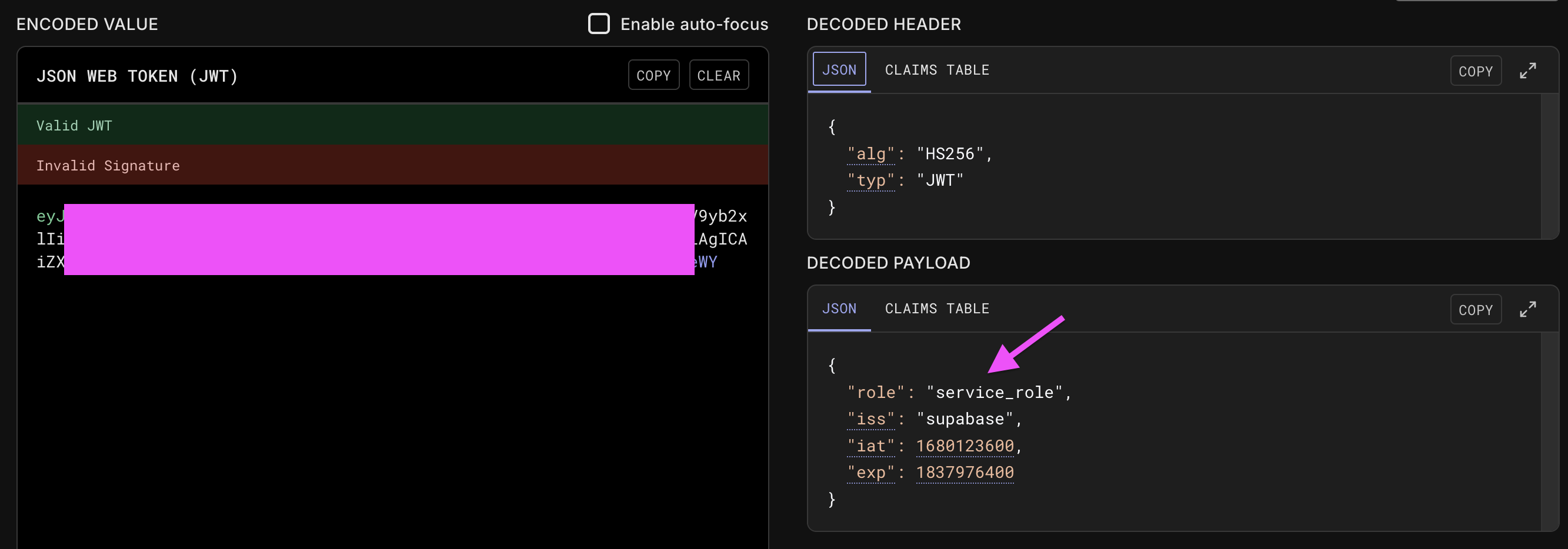

Home Assistant has shipped with a Citybikes integration ever since HA 0.49 (that was long ago, 2017!). As the project’s architecture evolved, the integration became a legacy piece of code. Legacy, in this case, means it cannot be updated with new features until it conforms to a minimum set of requirements. There have been multiple attempts to add information about electric bikes to the integration (#82945 #85279 #96764), but the answer was always the same: any integration must use a third-party library to access a remote API.

I think having Citybikes in Home Assistant is a very cool thing, so it was time to at least take the first steps towards bringing the integration out of legacy.

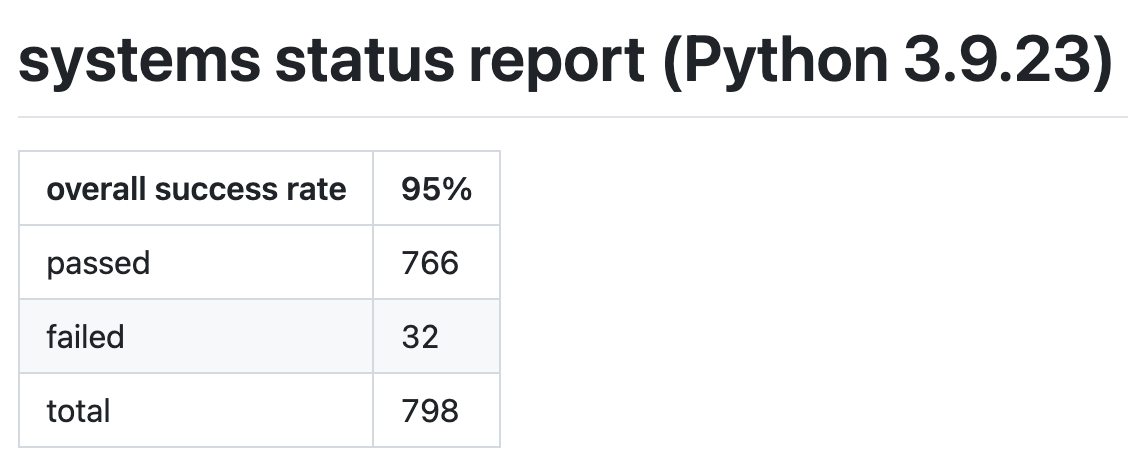

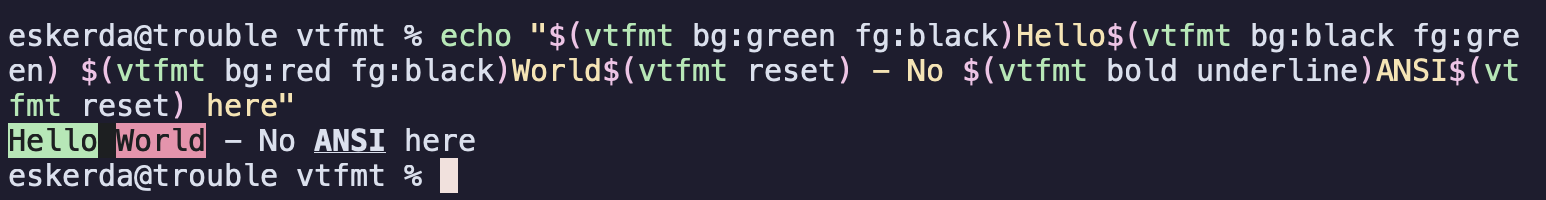

Back in 2016 I wrote python-citybikes, a minimal Python client for the Citybikes API, to use in cmdbikes. It’s not a particularly well-made library, and some parts leave a bit to be desired, but overall it works OK. To use it in HA, I still needed to bring the library back to the future of 2025: asyncio support and, while at it, a workflow to publish it to PyPI.

Being a bit lazy about rewriting the library, I went with adding a separate asyncio module that keeps the same interface. I have seen other projects take this path, and I think it’s the most straightforward way of doing it without worrying about the colour of your functions. I might go back to it to make the library try to be less smart, maybe in 10 years :)
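For illustration, here is a minimal sketch of the "separate asyncio module with the same interface" pattern. The Client/AsyncClient classes, parse_networks and the injected fetch callables are hypothetical and are not python-citybikes’ actual API. The I/O is passed in as a callable so the parsing is shared and the example runs offline; the URL is the public Citybikes API v2 root, but nothing is requested here.

```python
import asyncio
import json
from typing import Awaitable, Callable

# Public Citybikes API v2 root; only used to build URLs, nothing is fetched here.
API_ROOT = "https://api.citybik.es/v2"


def parse_networks(body: str) -> list[dict]:
    """Shared, I/O-free parsing used by both clients."""
    return json.loads(body)["networks"]


class Client:
    """Blocking client. `fetch` is any callable taking a URL and returning the body."""

    def __init__(self, fetch: Callable[[str], str]):
        self._fetch = fetch

    def networks(self) -> list[dict]:
        return parse_networks(self._fetch(f"{API_ROOT}/networks"))


class AsyncClient:
    """Same method names and return values, but coroutine-based."""

    def __init__(self, fetch: Callable[[str], Awaitable[str]]):
        self._fetch = fetch

    async def networks(self) -> list[dict]:
        return parse_networks(await self._fetch(f"{API_ROOT}/networks"))


# Fake transports returning a canned response, so the example runs offline.
CANNED = json.dumps({"networks": [{"id": "velib", "name": "Velib"}]})


def fake_fetch(url: str) -> str:
    return CANNED


async def fake_async_fetch(url: str) -> str:
    return CANNED


print(Client(fake_fetch).networks())
print(asyncio.run(AsyncClient(fake_async_fetch).networks()))
```

Since parsing lives in a plain function, only the thin I/O layer is duplicated, and callers pick the colour of their functions by picking the class.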

With this in place, I could put together a minimal pull request to Home Assistant: https://github.com/home-assistant/core/pull/151009

With this blocker removed, I hope the Citybikes integration in HA will keep evolving, and as far as I know, that’s already in the works. So, cheers to that!

Quick way to scaffold a new pybikes integration

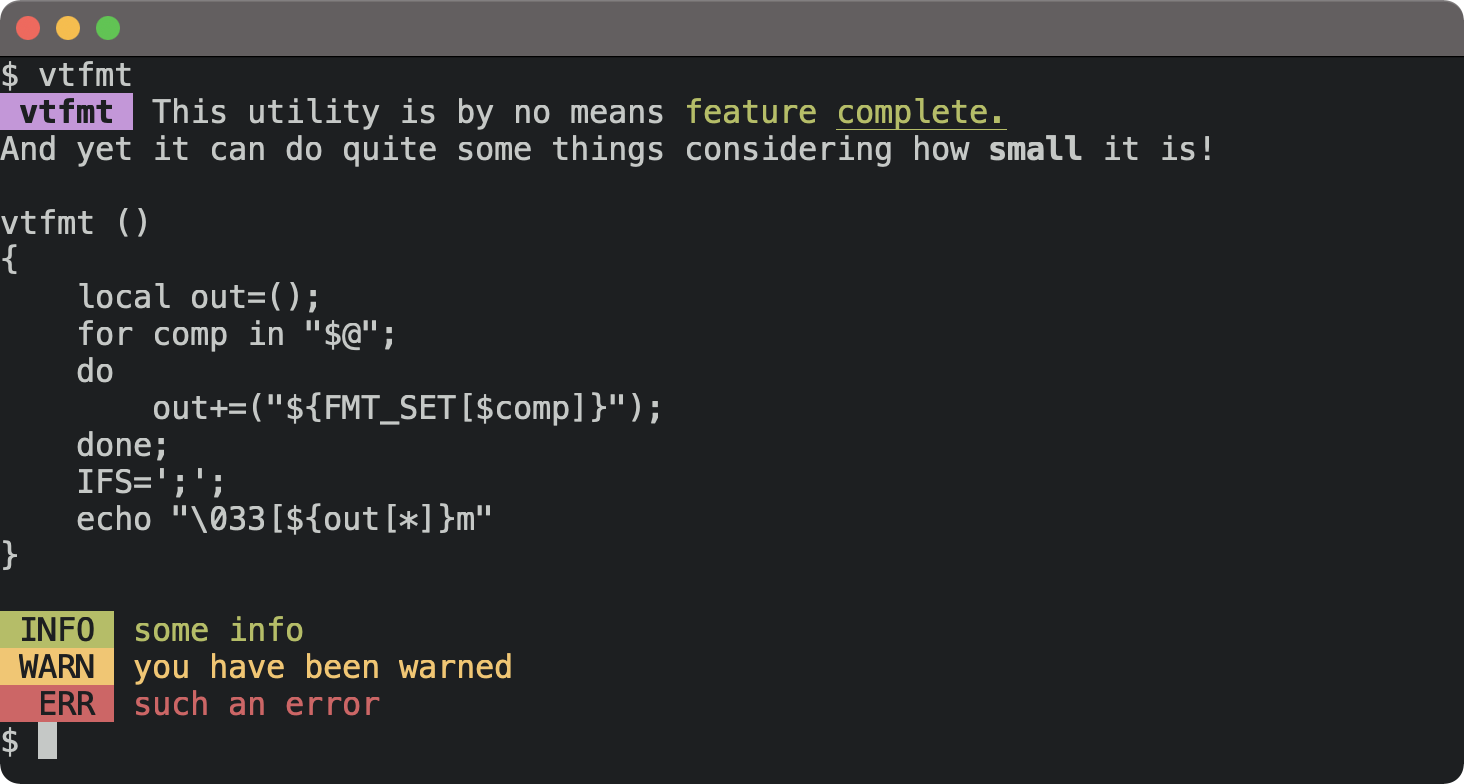

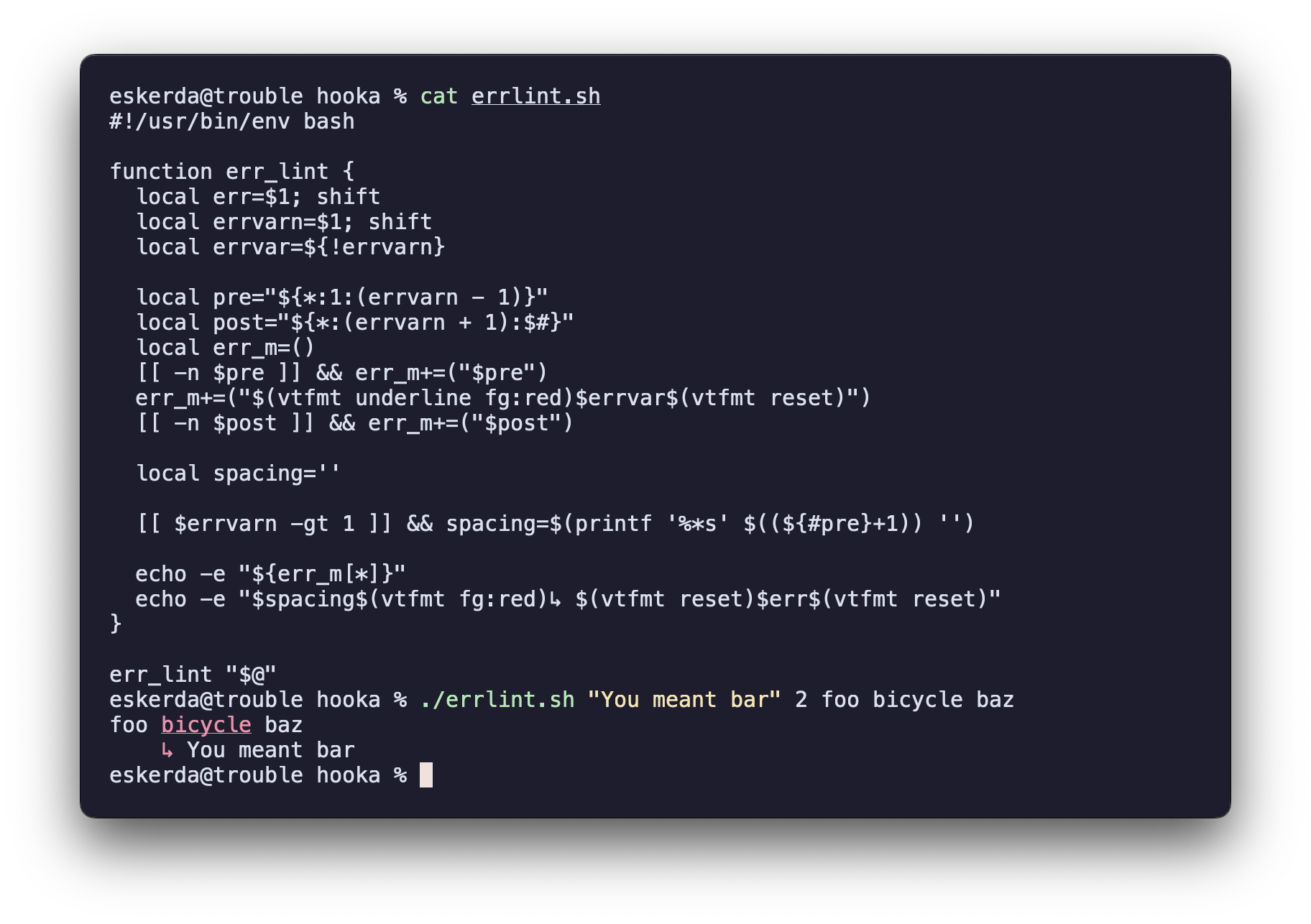

While working on HA, I noticed their scaffolding script for new integrations, and I kind of liked it as a way to quickly start things. On pybikes, the approach has been to copy-paste a system implementation that looks sane and go from there. The problem is that this replicates patterns that might not be the best way of doing things (which, in turn, means reviews need extra scrutiny).

Inspired by how successful HA is at getting new integrations contributed, I added a scaffolding script to pybikes, which is now the recommended way of starting things. When run, it generates a valid implementation of a made-up system with a static data feed.

```console
$ python -m utils.scaffold example
================================================
Here is your 'Example' implementation

System: pybikes/example.py
Data: pybikes/data/example.json

Run tests by:
$ pytest -k Example

Visualize result:
$ make map! T_FLAGS+='-k Example'

Happy hacking :)
================================================
```

This is no replacement for proper documentation, but I believe in code that documents itself, not through docstrings, but by being simple and clear. Of course, that might only work for the simple cases, but at least that’s the spirit.
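To give an idea of what a scaffolding script like this does, here is a minimal, hypothetical sketch: it derives a class name from the system name and renders two files at the paths shown in the scaffold output. The templates, the JSON fields and the helper names (class_name, scaffold) are made up for illustration; this is not the contents of pybikes’ utils/scaffold.py, and the generated class does not use the real pybikes base classes.

```python
import json
import sys
from pathlib import Path
from string import Template

# Hypothetical template, NOT the real pybikes one: a made-up system
# with a static, hard-coded data feed so it works offline.
SYSTEM_TEMPLATE = Template('''\
"""$cls: a made-up bike share system with a static feed."""


class $cls:
    # Static, made-up data so the generated system runs offline.
    stations = [
        {"name": "Station 1", "latitude": 0.0, "longitude": 0.0, "bikes": 3, "free": 7},
    ]
''')


def class_name(name: str) -> str:
    """'example' -> 'Example', 'my_system' -> 'MySystem'."""
    return "".join(part.capitalize() for part in name.split("_"))


def scaffold(name: str, root: Path = Path(".")) -> dict[str, Path]:
    """Render the system module and its data file under root/pybikes/."""
    cls = class_name(name)
    system = root / "pybikes" / f"{name}.py"
    data = root / "pybikes" / "data" / f"{name}.json"
    data.parent.mkdir(parents=True, exist_ok=True)
    system.write_text(SYSTEM_TEMPLATE.substitute(cls=cls))
    # Hypothetical instance metadata; field names are illustrative only.
    data.write_text(json.dumps({
        "class": cls,
        "instances": [{"tag": name, "meta": {"name": cls, "city": "Nowhere"}}],
    }, indent=4))
    return {"system": system, "data": data}


# Only scaffold when a name is given, e.g. `python scaffold_sketch.py example`.
if __name__ == "__main__" and len(sys.argv) > 1:
    paths = scaffold(sys.argv[1])
    print(f"System: {paths['system']}")
    print(f"Data: {paths['data']}")
```

Generating a small, known-good starting point means new contributions copy the template rather than whatever existing system happened to look sane.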

Open Transport Community Conference 2025

I spent a few days in Vienna attending the first edition of the Open Transport Community Conference, the (un)conference about Free Software and Open Data for mobility and public transport.

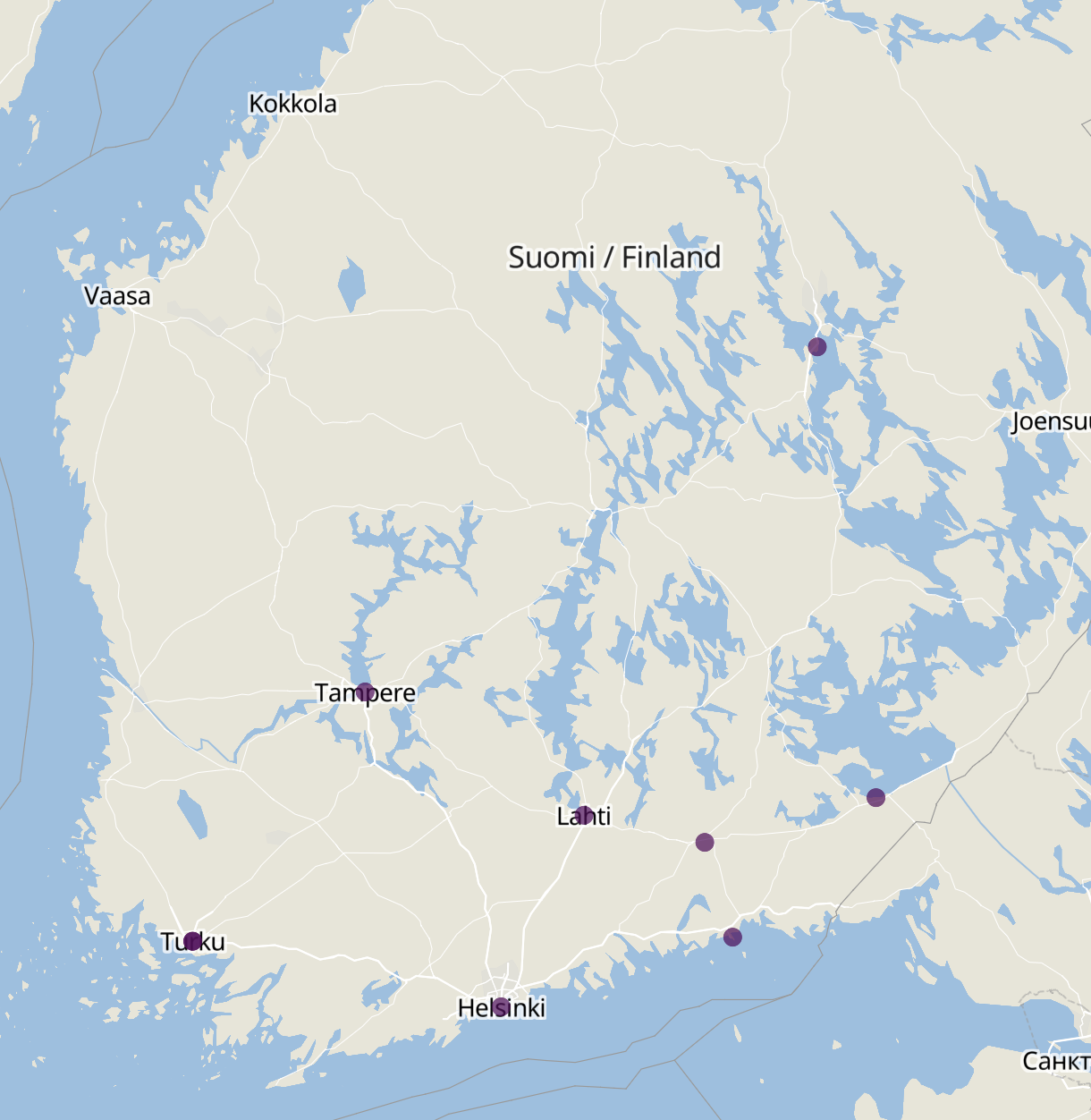

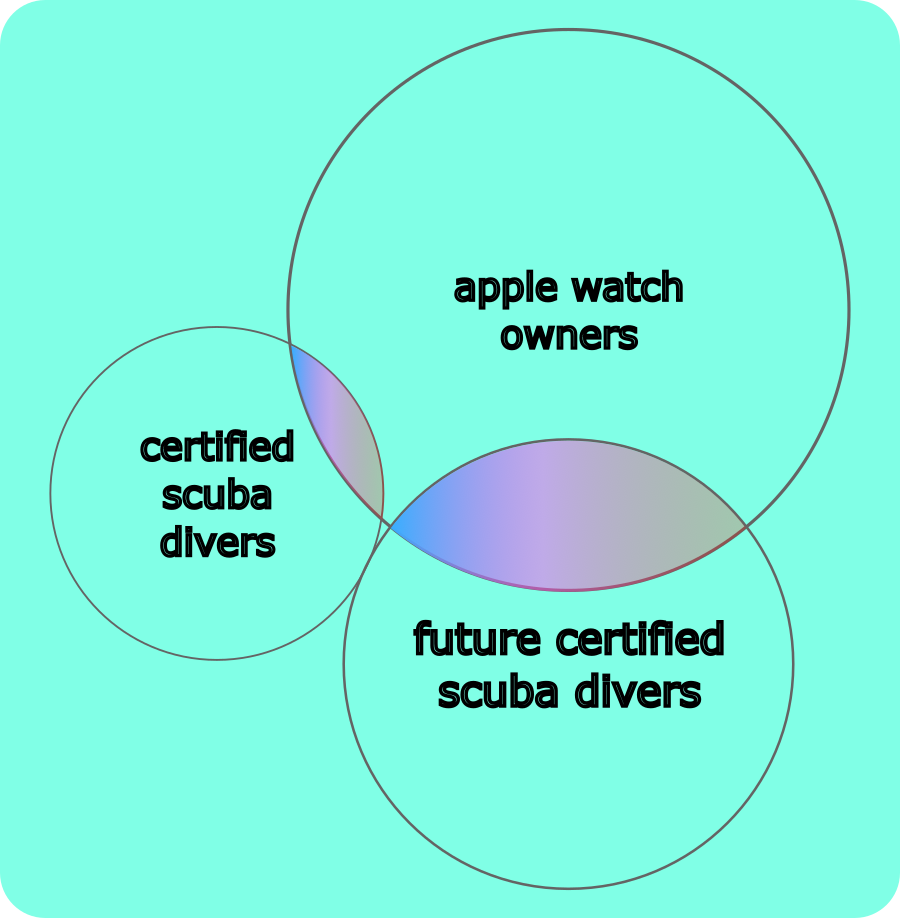

We had a session about integrating GBFS information from different sources and about open source projects that provide aggregated feeds, Citybikes being one of them. In the session I got to meet other feed aggregators, like https://derp.si/, which provides feeds for Slovenia, and https://mobidata-bw.de/ for the state of Baden-Württemberg, as well as MobilityData. The discussion was also joined by MOTIS and Transitous, a FOSS routing engine and a routing service respectively. MOTIS supports GBFS, and Transitous is interested in integrating more bike share feeds into its multimodal routing. We are going to work towards Transitous consuming feeds from our GBFS endpoint in cases where no official GBFS feed is available. Transitous might end up being the de facto routing service for most FOSS transport apps, so it would be amazing for Citybikes to be part of that.
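GBFS splits a system into several JSON feeds listed in a gbfs.json auto-discovery file: static station metadata lives in station_information and live availability in station_status, and a consumer joins the two on station_id. Below is a minimal sketch of that join, assuming the JSON has already been fetched; the function names and station data are made up, and this is not how Citybikes, MOTIS or Transitous implement it.

```python
from typing import Any


def feed_urls(discovery: dict[str, Any], lang: str = "en") -> dict[str, str]:
    """Map feed name -> URL from a gbfs.json auto-discovery document."""
    data = discovery["data"]
    # GBFS 3.0 lists feeds directly under data.feeds;
    # GBFS 2.x nests them under a language key, e.g. data.en.feeds.
    feeds = data["feeds"] if "feeds" in data else data[lang]["feeds"]
    return {feed["name"]: feed["url"] for feed in feeds}


def merge_stations(info: dict[str, Any], status: dict[str, Any]) -> list[dict[str, Any]]:
    """Join station_information with station_status on station_id."""
    live_by_id = {s["station_id"]: s for s in status["data"]["stations"]}
    merged = []
    for station in info["data"]["stations"]:
        live = live_by_id.get(station["station_id"], {})
        merged.append({
            "id": station["station_id"],
            # In GBFS 3.0, name is a list of localized strings; passed through as-is.
            "name": station["name"],
            "lat": station["lat"],
            "lon": station["lon"],
            # None when the station has no status entry.
            "bikes": live.get("num_bikes_available"),
            "docks": live.get("num_docks_available"),
        })
    return merged


# Made-up documents standing in for already-fetched JSON responses.
discovery = {"data": {"en": {"feeds": [
    {"name": "station_information", "url": "https://example.org/gbfs/en/station_information.json"},
    {"name": "station_status", "url": "https://example.org/gbfs/en/station_status.json"},
]}}}
info = {"data": {"stations": [
    {"station_id": "1", "name": "Praterstern", "lat": 48.2185, "lon": 16.3920},
    {"station_id": "2", "name": "Hauptallee", "lat": 48.2100, "lon": 16.4050},
]}}
status = {"data": {"stations": [
    {"station_id": "1", "num_bikes_available": 4, "num_docks_available": 11},
]}}

print(feed_urls(discovery)["station_status"])
for station in merge_stations(info, status):
    print(station["name"], station["bikes"], station["docks"])
```

Keeping stations without a status entry (with None counts) rather than dropping them is a design choice; a router might prefer to skip them instead.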

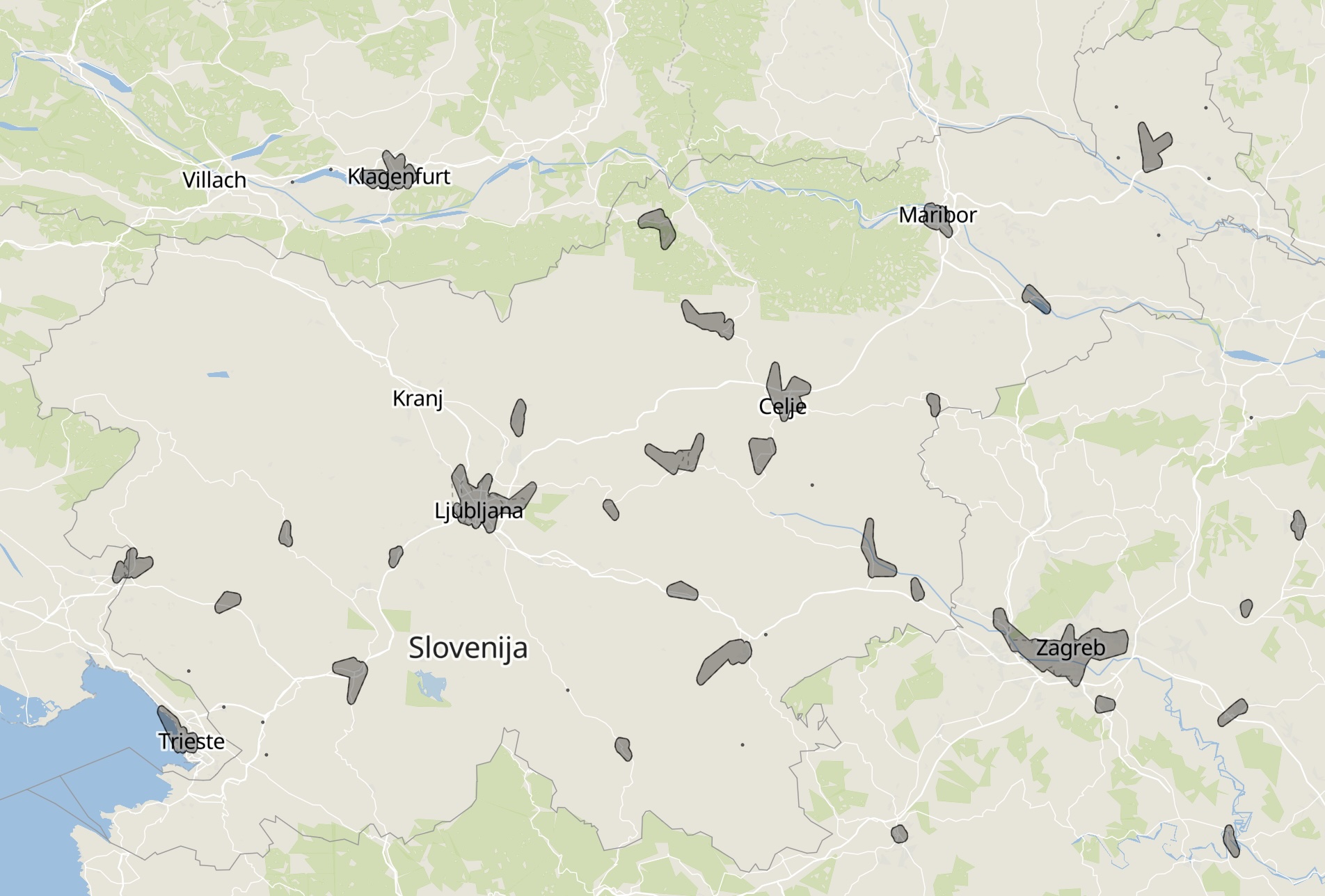

I integrated the feeds from https://gbfs.derp.si/providers into pybikes (#859), meaning we now have pretty good coverage in Slovenia!

Other than that, I was very amused to find that the Wurstelprater, the amusement park in the Prater, keeps its doors open at night. On the first day I arrived late in Vienna, so on my way to find a Würstelstand before going to bed, I found myself inside the park, surrounded by shut-down attractions, lights, and creepy clown statues. Sneaking into an amusement park was on my bucket list, and it turned out no sneaking was needed at all!

DuckDB-Wasm + time-series bike share data demo

During the conference I wanted to show off the historical bike share data that Citybikes publishes as Parquet files, so I worked a bit more on a demo I have been writing that uses DuckDB-Wasm.

The big-brain idea here is to build a dashboard that runs entirely on the client using only static assets: DuckDB runs in the browser via WebAssembly and queries the Parquet files that Citybikes hosts directly.

Check out the demo if you like (the code is hell, and the first load might take a while as it downloads the DuckDB-Wasm payload; if it fails to load, try again in an incognito window). The demo can load any (small) Parquet file from Citybikes passed in the feed query parameter:

- HelloCycling in Tokyo during September 2025: https://eskerda.com/demos/cb-analytics/?feed=https%3A%2F%2Fdata.citybik.es%2Fdumps%2Fby-network%2F2025%2F202509-hellocycling-tokyo-stats.parquet

- Velib in Paris during September 2025: https://eskerda.com/demos/cb-analytics/?feed=https%3A%2F%2Fdata.citybik.es%2Fdumps%2Fby-network%2F2025%2F202509-velib-stats.parquet

- Citi Bike in NYC during January 2025: https://eskerda.com/demos/cb-analytics/?feed=https%3A%2F%2Fdata.citybik.es%2Fdumps%2Fby-network%2F2025%2F202501-citi-bike-nyc-stats.parquet
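The links in the list above follow a simple pattern: a monthly dump URL, percent-encoded into the feed query parameter. Here is a minimal sketch that builds them with the standard library, assuming the dump naming (by-network/YEAR/YYYYMM-tag-stats.parquet) holds for other networks and months; that pattern is inferred from these three links, not documented, and dump_url and demo_url are hypothetical helper names.

```python
from urllib.parse import urlencode

DEMO = "https://eskerda.com/demos/cb-analytics/"
DUMPS = "https://data.citybik.es/dumps/by-network"


def dump_url(tag: str, year: int, month: int) -> str:
    """Monthly stats dump URL (naming pattern inferred from the example links)."""
    return f"{DUMPS}/{year}/{year}{month:02d}-{tag}-stats.parquet"


def demo_url(feed: str) -> str:
    """Demo link with the Parquet URL percent-encoded into ?feed=."""
    # urlencode() uses quote_plus with safe="", so ':' -> %3A and '/' -> %2F.
    return f"{DEMO}?{urlencode({'feed': feed})}"


print(demo_url(dump_url("velib", 2025, 9)))
```

Encoding the whole feed URL (slashes included) keeps it as a single, unambiguous query value.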

My plan is to integrate these visualizations directly into https://data.citybik.es so Parquet files can be explored before downloading them. There are still some gotchas, though, like the slow first load and properly caching the Wasm payload, or the limit on allocated memory, which means it does not work for any Parquet file that needs more than 1 GB of memory.

This is all in a preliminary state, but I am really excited that such technology is available. No server process is munching data for any of this, which is huge!